Introduction

AWS Lambda has already revolutionized the way we approach serverless architecture, but it is not done yet! This article introduces you to LLRT (Low Latency Runtime) for JavaScript, a new approach to enhancing the speed and efficiency of JavaScript Lambda functions.

What does "enhancing speed" mean? It means up to 10x faster startup and 2x lower cost. You will see later on 😉

Yes, you can breathe a sigh of relief, you don't need to learn Rust... yet 👀

After discussing LLRT and AWS Lambda, we are going to deploy it with Terraform. I think this is the first project that deploys LLRT with Terraform. If you are interested in other Infrastructure as Code tools, then you can skip that chapter.

Understanding LLRT for JavaScript

LLRT (Low Latency Runtime) is a lightweight JavaScript runtime that addresses the demand for fast and efficient serverless applications.

Right now LLRT is experimental, although Richard Davison, the creator of LLRT, said during a stream for the Believe In Serverless community that they are trying to release a stable version by the end of 2024.

LLRT is built in Rust and uses QuickJS as its JavaScript engine to ensure efficient memory usage and startup time. Yeah Rust, but we won't need it because we are going to use good ol' JavaScript, or even TypeScript for that matter.

LLRT is not a replacement for Node.js. In fact, it only supports a fraction of the Node.js APIs. The LLRT repository documents what is supported and what's not. Because it doesn't support everything, we cannot use some lovely packages like Middy or Powertools. I know, it's a bummer, BUT the community already has issues open:

- Middy: https://github.com/middyjs/middy/issues/1181

- Powertools: https://github.com/aws-powertools/powertools-lambda-typescript/issues/2050

I hope LLRT is able to support more APIs in the future so we can work with these libraries ✌️

There are many scenarios where LLRT can make a difference:

- Latency-critical applications

- Functions with high volume

- Data transformation

- AWS service integrations

- Server-side rendering (SSR) for React applications

Personally, I'm really looking forward to a stable release, as many of my projects meet these requirements just fine 👌

Using LLRT to Improve AWS Lambda Function Efficiency


Before we start with everything else, I want to highlight one key factor: LLRT is not meant to take over all of our AWS Lambda functions. It is designed to work in conjunction with our existing infrastructure.

The world keeps evolving, just as it did 50 years ago, and we need to adapt and tackle new challenges along the way. In our specific case, this means that better programming languages will keep appearing, and we need to adapt to them! The way I see it, a codebase that mixes programming languages will be essential for performance improvement.

I'll be sure to create my own tools to switch runtimes as needed. Additionally, with LLRT, the transition should be smooth, so I'm very excited to test it out in real-world scenarios.

Setting Up The Environment

Before deploying AWS Lambda with Terraform, we need to go through a checklist of prerequisites:

- AWS account with credentials on your machine

- Terraform installed

See? Easy! No need to roll your eyes 🙄

As you can imagine, we are going to use Terraform for AWS Lambda deployment. For that, we have Terraform deployment scripts that bundle the code, dependencies, and the LLRT binary, and ship everything to the AWS cloud.

Ready?! 🚀

Building the Low Latency Runtime (LLRT)

Enhancing AWS Lambda performance using LLRT has never been this easy! Before we start the building process, we need to download the LLRT binary file from the LLRT GitHub repository.

This binary file is all we need to bundle for our AWS Lambda optimization.

After that, we can bundle our application code. To do that, I've created a not-so-pretty bash script that:

- does a few checks here and there to ensure everything is aligned

- installs node modules

- downloads the binary file from GitHub

- starts the bundling process with esbuild

And after all of that, the code gets zipped and shipped 🚀

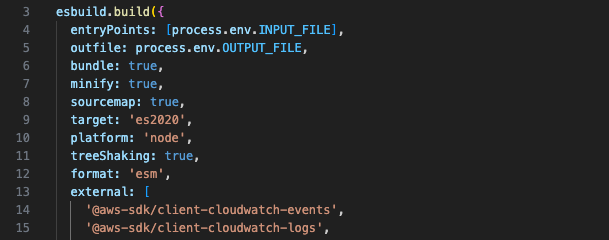

I want to highlight this esbuild configuration:

    esbuild.build({
      entryPoints: [process.env.INPUT_FILE],
      outfile: process.env.OUTPUT_FILE,
      bundle: true,
      minify: true,
      sourcemap: true,
      target: 'es2020',
      platform: 'node',
      treeShaking: true,
      format: 'esm',
      external: [
        '@aws-sdk',
        '@smithy',
        'uuid',
      ],
    });

Here we set the format to ESM because LLRT only supports this format.
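To make that configuration a little easier to reuse, here is a minimal sketch that assembles the same options from environment variables and fails fast when one is missing. The helper name `buildOptions` and the example paths are hypothetical. They are not part of esbuild or of the repository. The returned object is meant to be passed to `esbuild.build(...)`.

```javascript
// Hypothetical helper: assembles the esbuild options shown above from an
// env-like object, and throws early if a required variable is missing.
function buildOptions(env) {
  for (const name of ['INPUT_FILE', 'OUTPUT_FILE']) {
    if (!env[name]) {
      throw new Error(`Missing required environment variable: ${name}`);
    }
  }

  return {
    entryPoints: [env.INPUT_FILE],
    outfile: env.OUTPUT_FILE,
    bundle: true,
    minify: true,
    sourcemap: true,
    target: 'es2020',
    platform: 'node',
    treeShaking: true,
    format: 'esm', // the format this article targets for LLRT
    // Left out of the bundle, exactly as in the configuration above.
    external: ['@aws-sdk', '@smithy', 'uuid'],
  };
}

// Example with made-up paths; in the build script this would be process.env,
// and the result would be handed to esbuild.build(...).
const exampleOptions = buildOptions({
  INPUT_FILE: 'src/index.js',
  OUTPUT_FILE: 'dist/index.mjs',
});
console.log(exampleOptions.format, exampleOptions.outfile);
```

Keeping the options in a plain function like this means the checks run before esbuild is ever invoked, so a missing variable shows up as a clear error instead of a confusing build failure.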

During this project, I was really struggling to make it work. At one point, I was watching Julian Wood's webinar about AWS Lambda Internals, and I don't know, it must have been his voice or his influence. Ten minutes in, my code started working and I fully deployed my first LLRT Lambda with Terraform 🥹

The AWS Lambda function code is just standard code. I've just initialized a few libraries from @aws-sdk.

⚠️ Note: LLRT doesn't support every @aws-sdk v3 library; it only supports a fraction of them. See here for the full list.
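As a rough idea of what "standard code" means here, this is a minimal hello-world handler sketch. It is not the repository's code: the names `buildResponse` and `handler` and the response shape are illustrative assumptions, and the @aws-sdk client setup is left out so the snippet runs on its own. In the real ESM entry file, `handler` would be exported.

```javascript
// Illustrative only: builds an API-Gateway-style response object.
function buildResponse(event) {
  const name = (event && event.name) || 'world';
  return {
    statusCode: 200,
    headers: { 'content-type': 'application/json' },
    body: JSON.stringify({ message: `hello ${name}` }),
  };
}

// Same (event) => Promise shape as a regular Node.js Lambda handler.
// In the bundled ESM file this would be written as `export const handler`.
const handler = async (event) => buildResponse(event);

// Local smoke run with a made-up event.
handler({ name: 'LLRT' }).then((res) => console.log(res.body));
```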

Deploying with Terraform

Terraform is a great Infrastructure as Code (IaC) tool, and enterprises love it. Unfortunately it hasn't received a lot of love from the Serverless community because, honestly, it's difficult to develop and test serverless architectures with Terraform.

Anyway, there are many tutorials out there on how to deploy LLRT with SAM or CDK. I wanted to add a few bits with Terraform, as many of my enterprise projects are written in Terraform.

Let's look at a step-by-step guide to deploying AWS Lambda with Terraform:

- Create an execution role with basic permissions

- Create a null_resource to invoke our build.sh script

- Add an archive_file to zip the /dist directory

- Create a null_resource to invoke the cleanup.sh script (to clean the /dist folder)

- Create aws_lambda_function with runtime provided.al2023 and reference the archive_file resource

- Profit! 💯

And here's an efficient AWS Lambda deployment using Terraform ✌️

After all of that (link to the repository down below), we can deploy with these commands:

    npm install
    terraform init
    terraform plan
    terraform apply

Voilà 🎉

Testing and Monitoring

Sooooooo this is the moment we are all waiting for, isn't it?

First, let's look at the AWS Lambda performance for Node.js 20:

- 600ms of init duration (or cold start ❄️)

- 75ms of duration

- 95MB of memory used

And now the same function running on LLRT:

- 110ms of init duration (do we still need to call it a "cold" start?)

- 1.4ms of duration

- 25MB of memory used

This is a simple hello world example, not a real-world scenario. Nevertheless, we can see a roughly 5.5x improvement in init duration, about 50x in duration, and almost 4x less memory used.
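The factors come from simple division of the figures listed above. Here is a minimal sketch of that arithmetic, rounded to one decimal. The variable and function names are just for illustration.

```javascript
// Measurements quoted above for the hello-world function.
const node20 = { initMs: 600, durationMs: 75, memoryMb: 95 };
const llrt = { initMs: 110, durationMs: 1.4, memoryMb: 25 };

// Improvement factor = baseline / candidate, rounded to one decimal.
function improvement(baseline, candidate) {
  return Math.round((baseline / candidate) * 10) / 10;
}

const factors = {
  init: improvement(node20.initMs, llrt.initMs),
  duration: improvement(node20.durationMs, llrt.durationMs),
  memory: improvement(node20.memoryMb, llrt.memoryMb),
};

console.log(factors); // { init: 5.5, duration: 53.6, memory: 3.8 }
```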

Pretty impressive for a "hello world" test.

Anyway, this article is about how to optimize AWS Lambda functions with LLRT and deploy it with Terraform, and I think we hit the nail right on the head ✌️


Best Practices

We saw how we can speed up AWS Lambda functions with LLRT. Before ending, I wanted to highlight a few AWS Lambda performance tips and tricks I added to this project:

- Enable tree-shaking: no one wants dead code, right?!

- Minify your code: it's a bit controversial, but I think if we want to squeeze out every last millisecond, we need to minify the code.

- Bundle your code with the @aws-sdk: better safe than sorry later 😉

These are just a few AWS Lambda function best practices. There are many more. If you want to have a look, I have some articles talking about them on my blog.

Conclusion

And there you have it, folks. We learned about LLRT and also how to deploy it with Terraform. It was a fun project and gave us the chance to learn more about how it works with our deployment.

Adding to that a few performance tuning tips for AWS Lambda with LLRT, we end up with a nice proof of concept (POC) for when LLRT goes live 🚀

Looking ahead, I'm excited about the potential of LLRT to enhance our serverless architectures even further. As it evolves and matures, LLRT promises to play a significant role in improving the efficiency and speed of our applications.

We just need to wait and see. Let's wait together and explore more articles about LLRT, shall we?!

Here's the link to my GitHub repository: https://github.com/Depaa/lambda-llrt-terraform 😉

If you enjoyed this article, please let me know in the comment section or send me a DM. I'm always happy to chat! ✌️

Thank you so much for reading! 🙏 Keep an eye out for more AWS-related posts, and feel free to connect with me on LinkedIn 👉 https://www.linkedin.com/in/matteo-depascale/.

References

- https://www.terraform.io/

- https://github.com/awslabs/llrt

- https://docs.aws.amazon.com/lambda/latest/dg/welcome.html

Disclaimer: opinions expressed are solely my own and do not express the views or opinions of my employer.